Mimi Voice Clarity is one of the latest additions to our product offering, helping headphone manufacturers deliver clearer, more intelligible speech to their users. Built specifically for headphones, it enhances speech clarity in real time, making conversations easier to follow even in noisy or challenging environments.

We spoke with two members of the Mimi team, Product Director Peter Möderer and Lead Sound Engineer John Usher, to dive into how Voice Clarity works and why it’s becoming a key differentiator in the competitive headphone market.

What is Mimi Voice Clarity?

Voice Clarity is really about making conversations easier to follow, especially when you’re in a noisy place. It uses AI to preserve the sounds you actually want to hear, like someone talking to you or an announcement, while reducing background distractions such as wind, traffic, keyboard clicks, or a room full of people chatting.

It can even help reduce the level of sound coming from behind you, acting like a sound bubble that lets through only the speech in your immediate surroundings. For headphone companies, it’s a powerful way to help users stay connected and engaged, no matter where they are.

What kinds of devices or use cases is it designed for?

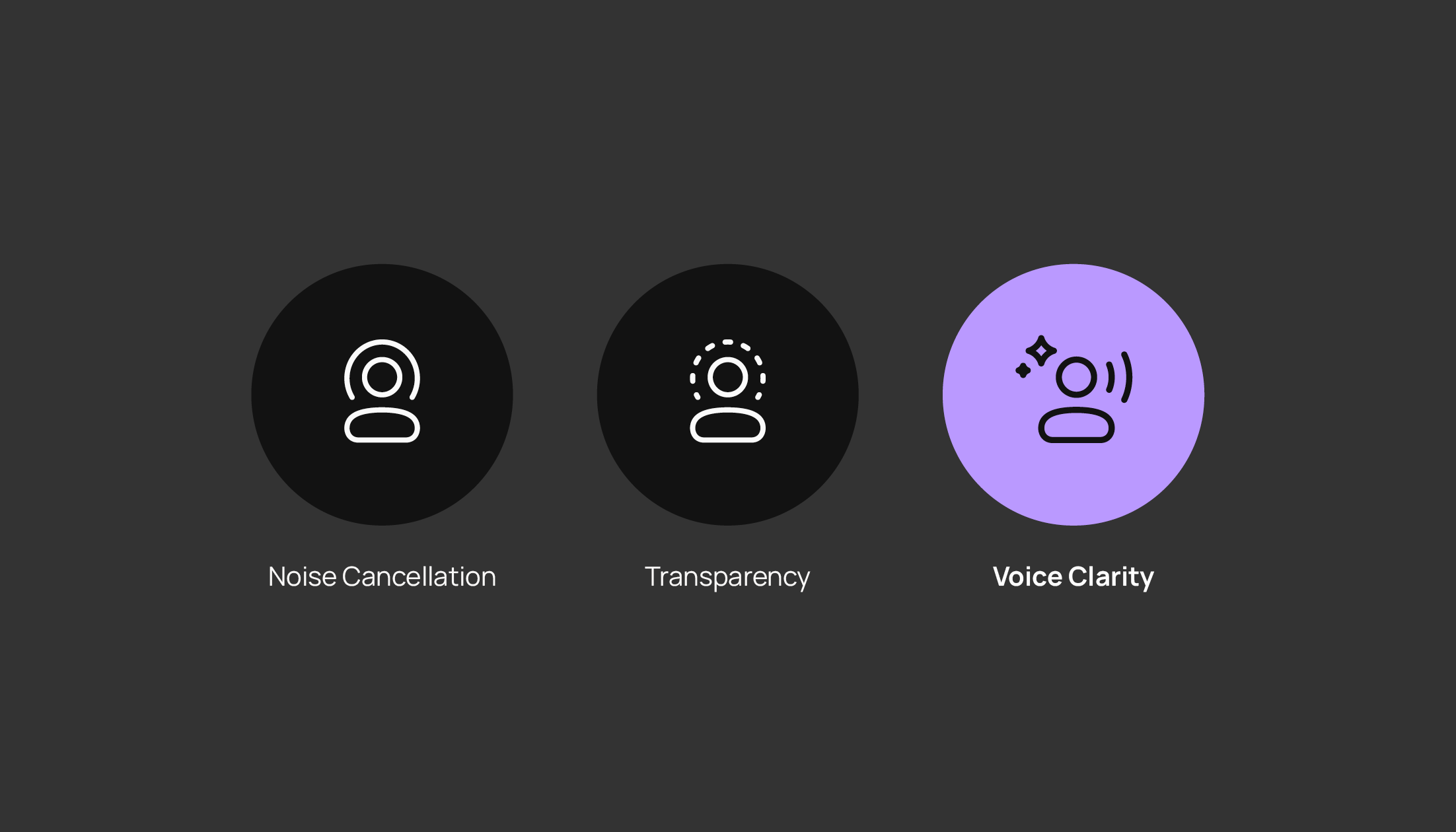

Voice Clarity is a next-generation transparency mode, currently designed for headphones. It goes beyond traditional transparency modes by taking specific signals of interest into account.

Existing transparency modes help you stay aware of your surroundings while wearing headphones. For example, they can help you navigate traffic, where it is important to recognize an approaching car and not to be fully isolated from the world around you.

Voice Clarity, however, goes a step further. Instead of simply letting in all outside sounds to keep you aware of your surroundings, it focuses on helping you understand speech and conversations better while wearing your headphones.

So, imagine you’re wearing your headphones and someone starts talking to you. With Voice Clarity, you don’t have to take them off to follow the conversation. You can use the feature in any environment where you feel you need a bit of extra support to understand what is being said.

It’s also especially helpful for people with mild to moderate hearing loss. Many people struggle to follow speech in real-world situations, and research shows that being unable to follow and engage in conversations can lead to secondary effects such as social isolation. This kind of technology helps bridge that gap, at a fraction of the cost of traditional hearing aids or assistive devices.

What’s happening technically behind the scenes?

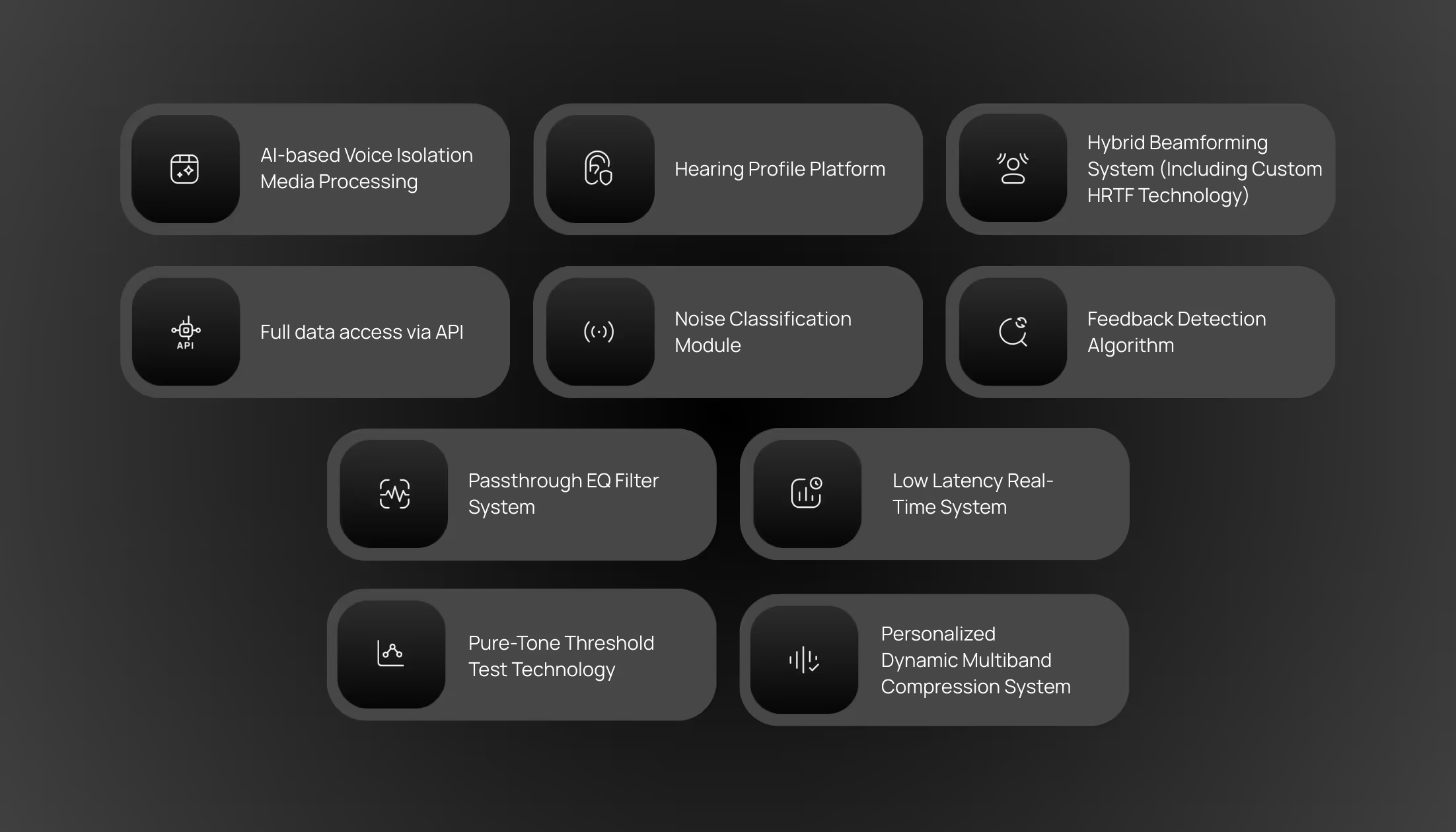

There’s quite a bit going on under the hood to make the product work the way it does. It’s not just one feature; it’s a combination of smart technologies working together in real time to enhance speech.

First, there’s beamforming, or directional enhancement. The system focuses on the voice coming from in front of you, such as the person you’re actually talking to, and reduces sounds coming from other directions. So, instead of picking up every little noise around you, it locks onto what matters.
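To make the directional idea concrete, here is a minimal sketch of a first-order differential (delay-and-subtract) beamformer, one textbook way to build a front-facing pickup pattern with a rear null from two microphones. It assumes a front and a rear microphone on the same axis, with sound taking a whole number of samples `d` to travel between them; the function names and signal values are hypothetical, and this is not Mimi’s implementation.

```python
import math

def delay(signal, d):
    """Delay a list of samples by d samples, zero-padding the start."""
    d = min(d, len(signal))
    return [0.0] * d + signal[: len(signal) - d]

def differential_beamformer(front, rear, d):
    """First-order differential array: y[n] = front[n] - rear[n - d].

    Sound from behind reaches the rear mic d samples before the front mic,
    so subtracting the delayed rear signal cancels it (a rear-facing null).
    """
    rear_delayed = delay(rear, d)
    return [f - r for f, r in zip(front, rear_delayed)]

def energy(signal):
    """Sum of squared samples."""
    return sum(x * x for x in signal)

# Simulated source and inter-microphone travel time in samples (assumed values).
source = [math.sin(0.3 * n) for n in range(200)]
d = 2

# Talker in front: reaches the front mic first, the rear mic d samples later.
front_talker = differential_beamformer(source, delay(source, d), d)
# Noise from behind: reaches the rear mic first, the front mic d samples later.
rear_noise = differential_beamformer(delay(source, d), source, d)

print(energy(front_talker) > 0)  # True: frontal sound is kept
print(energy(rear_noise))        # 0.0: rear sound is cancelled
```

Practical differential arrays also equalize the output, because the subtraction attenuates low frequencies, and they tune or adapt the delay to shape the pickup pattern.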

There is also AI-based denoising. Once the direction is set, the noise has to be removed so that only the speech signal remains. Denoising algorithms and speech-enhancement EQs have been on the market for a long time, but AI brings tremendous benefits: it removes noise from the signal effectively and reacts quickly enough to cut out onsets and transients, such as clinking crockery. These sounds are very annoying with traditional speech-assistance algorithms, which apply a certain level of gain and therefore tend to overamplify them.
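Neural denoisers commonly work by estimating a gain (a mask) between 0 and 1 for each frequency band in each short frame and multiplying it into the noisy signal. The sketch below shows only that mask step, using a classic Wiener-style gain as a stand-in for what a trained network would predict; the band powers, `gain_floor`, and function names are illustrative assumptions, not Mimi’s model.

```python
import math

def wiener_gain(noisy_power, noise_power, gain_floor=0.1):
    """Gain for one band: max(floor, 1 - N / X).

    This equals the Wiener gain with a maximum-likelihood SNR estimate.
    It never exceeds 1, so a band can be attenuated but never boosted.
    """
    if noisy_power <= 0.0:
        return gain_floor
    return max(gain_floor, 1.0 - noise_power / noisy_power)

def frame_gains(noisy_power, noise_power, gain_floor=0.1):
    """One gain per frequency band for a single frame (a network would predict these)."""
    return [wiener_gain(x, n, gain_floor) for x, n in zip(noisy_power, noise_power)]

# Hypothetical 4-band frame: noisy input power and an estimate of the noise power.
noisy = [1.0, 16.0, 4.0, 0.0]
noise = [1.0, 1.0, 1.0, 1.0]
gains = frame_gains(noisy, noise)
print(gains)  # [0.1, 0.9375, 0.75, 0.1]

# The gains scale the band amplitudes (square roots of the powers).
enhanced = [g * math.sqrt(x) for g, x in zip(gains, noisy)]
```

A noise-power rule like this one passes any loud sound, including a clink of crockery; the advantage of a learned model is that it can assign low gains to non-speech transients frame by frame, which this formula cannot.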

Another important piece is adaptive feedback cancellation. Because the goal is to remove noise and then amplify the voice to the desired level, it is important not to generate feedback (howling), which is a common problem in small devices such as TWS (true wireless stereo) hearables. In the past, each processing step was simply chained and more or less isolated, but with AI it is possible to achieve results that were unthinkable two years ago. For example, noise isolation and feedback cancellation need to go hand in hand, so they are trained together based on the specific characteristics of each device.
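Classic adaptive feedback cancellation uses an adaptive filter that models the acoustic path from the earbud’s speaker back to its microphone and subtracts the predicted feedback from the microphone signal. Here is a minimal normalized LMS (NLMS) sketch of that classic approach, not the jointly trained AI system described above; the feedback path `true_path`, the step size `mu`, and the tap count are made-up values for illustration.

```python
import random

def nlms_feedback_canceller(speaker, mic, taps=8, mu=0.5, eps=1e-6):
    """Estimate the speaker-to-mic feedback path with NLMS.

    speaker: samples sent to the earbud speaker (the filter's reference input)
    mic:     microphone samples, which contain the fed-back speaker signal
    Returns the estimated path and the feedback-cancelled signal.
    """
    w = [0.0] * taps        # estimated feedback path (FIR taps)
    history = [0.0] * taps  # most recent speaker samples, newest first
    cleaned = []
    for x_n, d_n in zip(speaker, mic):
        history = [x_n] + history[:-1]
        predicted_feedback = sum(wi * xi for wi, xi in zip(w, history))
        e = d_n - predicted_feedback  # mic signal minus predicted feedback
        # Normalizing by the input power keeps the step size scale-independent.
        step = mu * e / (eps + sum(xi * xi for xi in history))
        w = [wi + step * xi for wi, xi in zip(w, history)]
        cleaned.append(e)
    return w, cleaned

# Simulate a short, made-up feedback path driven by white noise.
# There is no external speech here, so the filter can converge exactly.
rng = random.Random(0)
true_path = [0.5, -0.3, 0.1]
speaker = [rng.gauss(0.0, 1.0) for _ in range(3000)]
mic = [
    sum(h * speaker[n - k] for k, h in enumerate(true_path) if n - k >= 0)
    for n in range(len(speaker))
]

estimate, cleaned = nlms_feedback_canceller(speaker, mic)
print(estimate[:3])  # close to true_path
```

Plain NLMS is known to be biased when the incoming sound is correlated with the amplified output, as it is in a hearable, so practical systems add decorrelation or other measures on top of this basic loop.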

Finally, once the signal has been cleared of noise, we tailor it to each listener’s individual hearing ability. You take a short hearing test, and our scientific fitting algorithms generate a sound profile that adapts the signal to your hearing, further improving speech intelligibility.
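Mimi’s fitting algorithms are not described here, so as a stand-in, this sketch uses the textbook half-gain rule, which prescribes roughly half the measured hearing threshold (in dB HL) as gain at each test frequency. The frequencies, thresholds, and the `max_gain_db` safety cap are illustrative assumptions.

```python
def half_gain_profile(thresholds_db_hl, max_gain_db=20.0):
    """Map hearing thresholds (dB HL per frequency in Hz) to per-band gains in dB."""
    return {
        freq: min(0.5 * threshold, max_gain_db)
        for freq, threshold in thresholds_db_hl.items()
    }

def db_to_linear(gain_db):
    """Convert a gain in dB to an amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)

# Hypothetical hearing-test result with high-frequency loss.
thresholds = {250: 10.0, 1000: 20.0, 4000: 50.0}
profile = half_gain_profile(thresholds)
print(profile)  # {250: 5.0, 1000: 10.0, 4000: 20.0}
print({f: round(db_to_linear(g), 2) for f, g in profile.items()})  # {250: 1.78, 1000: 3.16, 4000: 10.0}
```

Modern prescriptive formulas such as NAL-NL2 are far more elaborate (they depend on input level and use compression), so this only illustrates the basic idea that a measured hearing profile maps to per-band gains.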

How does Voice Clarity improve accessibility and usability for users with mild to moderate hearing loss?

For users with mild to moderate hearing loss, everyday situations, like chatting in a café or catching an announcement in a busy train station, can be surprisingly challenging. That’s where Voice Clarity really shines. It’s designed to make speech stand out from the background so conversations feel more natural and less tiring to follow.

One of the big advantages is that it works inside the headphones people are already comfortable wearing, so there’s no need for a dedicated hearing device. This lowers the barrier to adoption, both in terms of cost and in terms of social perception, because it doesn’t feel like ‘medical equipment’; it just feels like better headphones.

By making voices clearer in real time, Voice Clarity helps people stay engaged in the moment. They don’t have to constantly ask for things to be repeated or withdraw from noisy situations. And that’s not just about convenience. It's about improving confidence, connection, and quality of life.

How does Voice Clarity complement or work with other existing audio features on headphones?

Voice Clarity fits right alongside the other audio features you find on modern headphones. As mentioned earlier, we see it as the next-generation transparency mode and a third core “ambient feature” on headphones, alongside ANC (active noise cancellation) and Transparency.

What makes it different is that it is meant for more specific scenarios. ANC and Transparency are designed for long-term use, and you might leave them on for your whole commute or work session. Voice Clarity, on the other hand, is something users can activate when they need to engage in a conversation or hear someone speaking clearly in a noisy setting. It’s there when you need it, and easy to toggle off again once you’re done. Currently, the mode is toggled on and off just like ANC or Transparency, either through the mobile app or with a button on the headphones.
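The three ambient modes and the on-demand nature of Voice Clarity can be pictured as a small state machine. In this hypothetical sketch (not Mimi’s SDK), switching Voice Clarity off returns the headphones to whichever long-term mode was active before; that restore behavior, and every name below, is an assumption for illustration.

```python
from enum import Enum

class AmbientMode(Enum):
    ANC = "anc"
    TRANSPARENCY = "transparency"
    VOICE_CLARITY = "voice_clarity"

class AmbientController:
    """Hypothetical controller for the three ambient modes."""

    def __init__(self, mode=AmbientMode.ANC):
        self.mode = mode
        # Long-term mode to return to when Voice Clarity is switched off (assumed behavior).
        self._restore = mode if mode is not AmbientMode.VOICE_CLARITY else AmbientMode.TRANSPARENCY

    def set_mode(self, mode):
        """Select a mode directly, for example from a mode picker in an app."""
        if mode is not AmbientMode.VOICE_CLARITY:
            self._restore = mode
        self.mode = mode

    def toggle_voice_clarity(self):
        """Handle a Voice Clarity toggle from an app control or a headphone button."""
        if self.mode is AmbientMode.VOICE_CLARITY:
            self.mode = self._restore
        else:
            self.mode = AmbientMode.VOICE_CLARITY

controller = AmbientController(AmbientMode.TRANSPARENCY)
controller.toggle_voice_clarity()
print(controller.mode)  # AmbientMode.VOICE_CLARITY
controller.toggle_voice_clarity()
print(controller.mode)  # AmbientMode.TRANSPARENCY
```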

From a headphone partner’s perspective, what does the integration process look like? Is this available as a software module, SDK, or part of a broader suite?

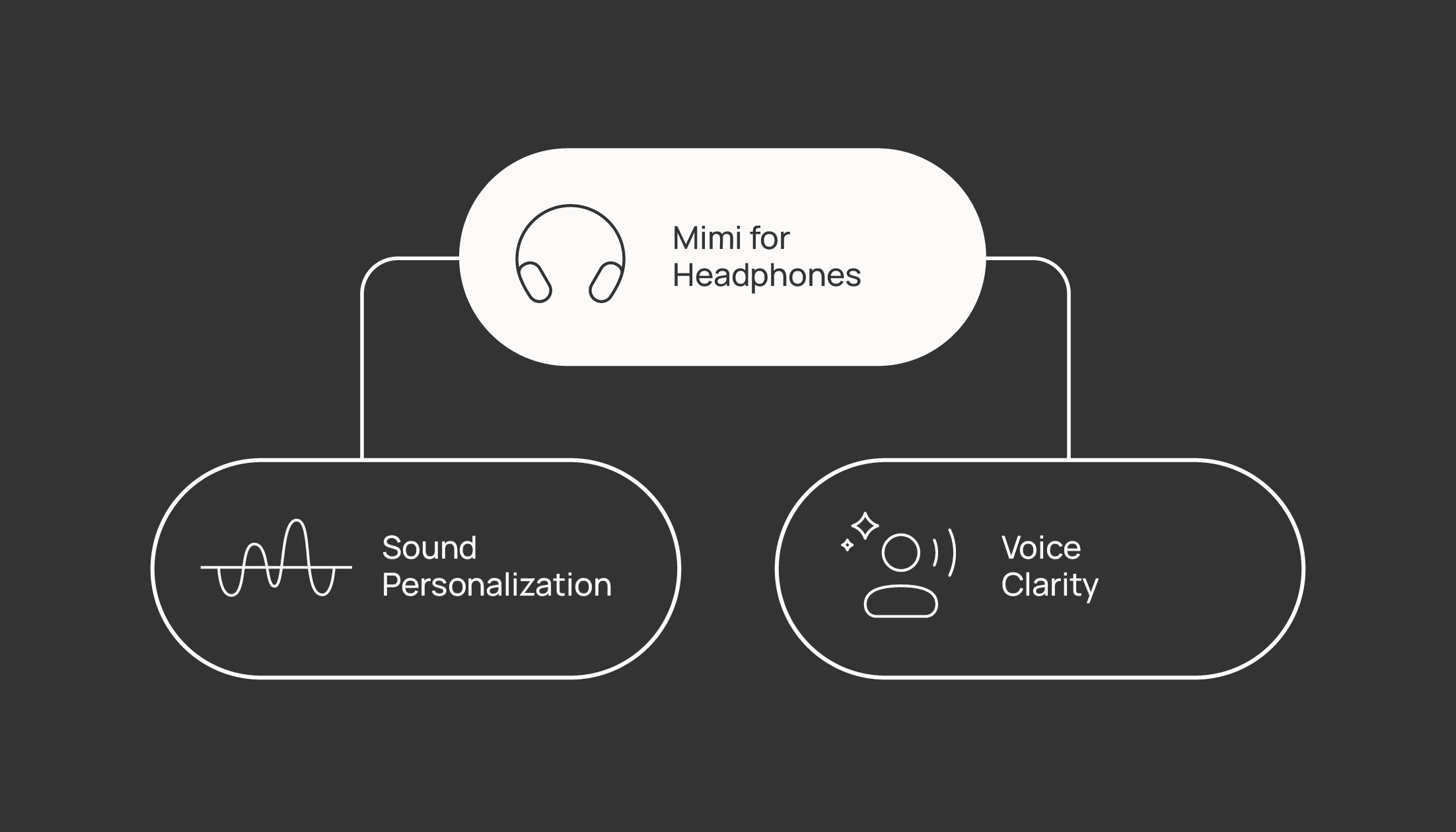

It is part of our broader Mimi for Headphones offering, which includes both Voice Clarity and Sound Personalization. Sound Personalization helps users hear better when they’re listening to music or taking a call, while Voice Clarity is focused on improving live, real-time speech. Each product can be integrated separately, or as a combined package.

Both are part of our mobile SDK, which includes everything needed for integration, from APIs to a complete set of screens. That means our partners can offer a seamless, branded experience inside their own product ecosystem, without having to build everything from scratch.

Are there any other upcoming features or innovations in the pipeline?

We are quite excited about where we are headed with automation. We are working on “Smart Scene Classification”, which, among many other use cases, can intelligently detect when Voice Clarity should be triggered. Typical triggers would be recognizing your own voice, or detecting that someone has started talking to you: specifically, when speech directed towards you is recognized above a defined threshold that clearly indicates you are being addressed.
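The “someone is addressing you” trigger can be sketched as a per-frame decision with a short hold requirement, so that a single stray frame does not switch modes. Everything here is hypothetical: the per-frame `speech_prob` and `frontal` inputs stand in for whatever a scene classifier would output, the threshold and frame count are made up, and the own-voice trigger is not modeled.

```python
def voice_clarity_trigger(frames, threshold=0.8, frames_to_trigger=3):
    """Return the frame index at which Voice Clarity would switch on, or None.

    frames: sequence of (speech_prob, frontal) pairs, where speech_prob is a
    classifier's probability that the frame contains speech and frontal says
    whether that speech arrives from in front of the listener.
    """
    consecutive = 0
    for i, (speech_prob, frontal) in enumerate(frames):
        if speech_prob >= threshold and frontal:
            consecutive += 1
            if consecutive >= frames_to_trigger:
                return i
        else:
            consecutive = 0  # any non-qualifying frame resets the count
    return None

frames = [
    (0.9, False),  # speech, but not from the front: ignored
    (0.85, True),
    (0.3, True),   # dip below the threshold resets the count
    (0.9, True),
    (0.95, True),
    (0.9, True),   # third consecutive qualifying frame
]
print(voice_clarity_trigger(frames))  # 5
```

Requiring several consecutive frames trades a little reaction time for fewer false activations; a real classifier would tune both the threshold and the hold length.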

How do you see products like Voice Clarity helping to shape the future of consumer audio?

While this is only the beginning of the journey, and society’s perception will need to change before having a conversation with earbuds in your ears is accepted (without people thinking you are being rude), we believe this is a great start to a new era in which hearing health features are more readily available in everyday audio products.

As the lines between consumer audio and assistive tech continue to blur, features like Voice Clarity will no longer be seen as ‘nice to have’; they will become expected. People want devices that not only sound great but also support their real-world needs, from staying socially connected to navigating noisy environments more comfortably.

In the future, we imagine these kinds of features becoming more proactive, personalized, and integrated, working quietly in the background to enhance how we engage with the world. It’s not just about audio anymore; it’s about accessibility, inclusion, and smarter user experiences built into the products people already use in their day-to-day lives.

How can I learn more?

- Try the online demo: https://mimi.io/products/voice-clarity

- Book a meeting with the sales team: https://mimi.io/contact