As personalization features become increasingly common in smart glasses, premium audio devices, and hearing-health products, a key question is emerging: Does the choice of transducer affect how deeply listeners can perceive those algorithmic adjustments?

A new study from xMEMS Labs and Mimi Hearing Technologies sheds light on this question and shows why extended frequency range matters for the future of adaptive audio.

The study: Mimi Sound Personalization across two hardware platforms

The study compared Mimi Sound Personalization processing delivered through two transducer types:

- xMEMS's solid-state Montara+ drivers

- High-quality dynamic drivers

Listeners heard two versions of Mimi's processing. The "pure" version processes signals solely to compensate for an existing hearing loss. The "signature" version subtly rebalances amplification across frequencies to minimize the perceived change in sound coloration, which some listeners subjectively prefer.

To quantify the perceived benefit of the xMEMS speaker driver and Mimi's processing, the study used MUSHRA (MUltiple Stimuli with Hidden Reference and Anchor) testing, an industry-standard blind listening methodology (ITU-R BS.1534) in which participants rate multiple versions of the same audio side by side without knowing which technology they are hearing. Using earbuds, participants rated playback across five music genres selected for their harmonic complexity and spatial content.
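The article does not describe how the MUSHRA ratings were aggregated. As a rough illustration of common MUSHRA practice, the sketch below applies one of ITU-R BS.1534-3's post-screening rules (exclude a listener who rates the hidden reference below 90 on more than 15% of items) and then reports a per-condition mean with an approximate 95% confidence interval. The data layout, the condition key `"hidden_ref"`, and the function names are hypothetical, and the interval uses a normal approximation (1.96 × standard error) rather than the t-distribution. This is not the study's actual analysis pipeline.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical layout: {listener_id: {condition_name: [score per test item, 0-100]}}
Ratings = dict[str, dict[str, list[float]]]


def passes_post_screening(hidden_ref_scores: list[float],
                          threshold: float = 90.0,
                          max_fraction: float = 0.15) -> bool:
    """Keep a listener only if they rated the hidden reference below
    `threshold` on no more than `max_fraction` of items."""
    if not hidden_ref_scores:
        return False  # no hidden-reference data: cannot screen, so exclude
    low = sum(1 for s in hidden_ref_scores if s < threshold)
    return low / len(hidden_ref_scores) <= max_fraction


def summarize(ratings: Ratings) -> dict[str, tuple[float, float]]:
    """Return {condition: (mean score, approx. 95% CI half-width)},
    pooling scores from listeners who pass post-screening."""
    kept = [listener for listener, conds in ratings.items()
            if passes_post_screening(conds.get("hidden_ref", []))]

    pooled: dict[str, list[float]] = {}
    for listener in kept:
        for condition, scores in ratings[listener].items():
            pooled.setdefault(condition, []).extend(scores)

    summary = {}
    for condition, scores in pooled.items():
        m = mean(scores)
        # Normal-approximation half-width; needs at least two scores.
        half = 1.96 * stdev(scores) / sqrt(len(scores)) if len(scores) > 1 else 0.0
        summary[condition] = (m, half)
    return summary
```

Condition names such as a hypothetical `"mimi_pure_xmems"` or `"mimi_signature_dynamic"` would sit alongside `"hidden_ref"` and the anchor conditions in each listener's dictionary; a per-listener or mixed-effects analysis would be a natural next step for explaining individual preferences.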

All testing was conducted in controlled acoustic conditions, with the two drivers' frequency responses matched up to 12 kHz, leaving extended high-frequency performance as the key variable.

Study findings: Combination of Mimi and xMEMS is preferred

The findings revealed a nuanced but important insight: listeners preferred Mimi's personalization on both driver types, but its perceptual impact was significantly greater on xMEMS hardware.

With the extended high-frequency range enabled by the xMEMS drivers, listeners perceived the effects of Mimi Sound Personalization much more clearly and rated the experience considerably higher. With xMEMS drivers, listeners also reported enhanced clarity, better instrument separation, a wider soundstage, and more defined transients.

The rating data showed a balanced preference overall between the "pure" and "signature" versions of Mimi processing. Neither age, listening experience, nor musical genre explained the variance in individual preference for either version, ruling out these simpler explanations.

![xMEMS x Mimi study results: violin plots](https://cdn.prod.website-files.com/6818bfbe7d79e080f9ab7199/6957958bff87cc5654c02b99_xMEMS%20x%20Mimi%20Study%20Violin%20Plots%20Results.png)

Why extended frequency range deepens the impact of personalization

The study attributes the difference in participant reactions between dynamic drivers and xMEMS drivers to xMEMS’s stable, linear, extended high-frequency response above 12 kHz.

This spectral range is where Mimi’s high-frequency processing targets critical perceptual cues related to clarity, spatial detail, and timbral accuracy.

"This study reveals that extended high-frequency capability gives our Mimi algorithms a wider frequency canvas to work across. When we target perceptual cues above 12kHz with the same precision we apply at lower frequencies, listeners perceive richer spatial detail, clearer timbral distinctions, and more nuanced personalization effects," said Vinzenz Schönfelder, R&D Lead at Mimi Hearing Technologies.

![Mimi HF processing filter bank](https://cdn.prod.website-files.com/6818bfbe7d79e080f9ab7199/695795b4f003bc73a6fb37ad_Mimi%20HF%20Processing%20Filter%20Bank.png)

Traditional dynamic drivers typically exhibit rolloff and instability in the extended high-frequency range due to physical limitations such as diaphragm breakup modes, voice-coil inductance, and unit-to-unit manufacturing variation. While personalization algorithms function within these constraints, extended frequency capability means they can address a broader spectrum of perceptual cues.

"Extending the usable frequency range is not just about adding more sound, but about preserving intent. When personalization algorithms apply fine spectral and temporal adjustments, a wider and more linear playback bandwidth allows those changes to remain perceptually distinct, enabling listeners to clearly perceive improvements in clarity, separation, and spatial detail," said Pierce Hening, Acoustic Engineer at xMEMS Labs.

From technical foundation to real-world application

This validated hardware–software synergy enables tangible opportunities across a range of emerging product categories and use cases, including:

High-Fidelity Audio

For brands committed to true high-fidelity performance, whose customers expect every algorithmic adjustment to be reproduced faithfully, the study shows that extended-frequency hardware significantly enhances the perceptual impact of intelligent audio processing.

Just as importantly, listener preference data, not just technical specifications, demonstrates the value of pursuing extended-range transducers.

Open-Ear Audio & Smart Eyewear

Open-ear form factors, whether bone conduction headphones, clip-on designs, or smart eyewear, introduce a consistent technical challenge: without a physical seal, both transducers and microphones are exposed to environmental noise, raising the perceptual threshold for both audio content and real-world conversation.

xMEMS’s extended high-frequency capability, combined with a stable impulse response, becomes particularly important in these contexts. Advanced signal processing applications, such as AI-driven speech enhancement, signal augmentation for open-ear acoustics, and spatial audio rendering, rely on accurate reproduction across the full frequency spectrum. When high-frequency information is rolled off or distorted, these algorithms lose critical perceptual cues needed to separate speech from noise, localize sound sources, or render spatial effects convincingly.

As open-ear form factors continue to proliferate, a strong technical foundation for intelligent, adaptive audio becomes increasingly critical.

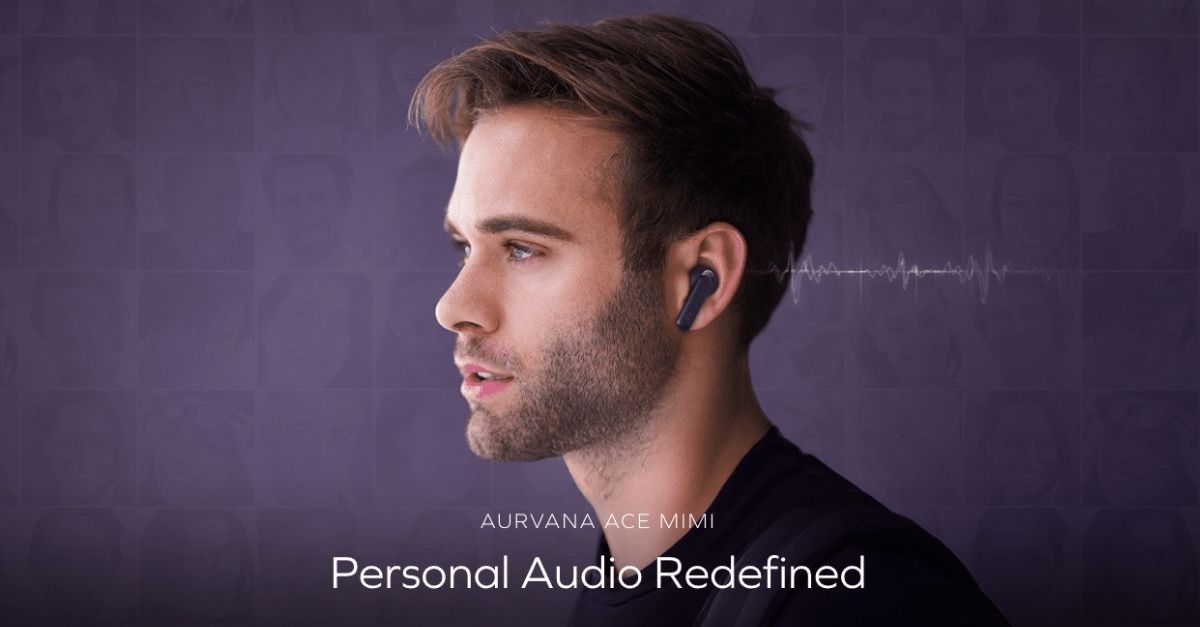

Proven commercial viability

2025 kicked off with the launch of the Creative Aurvana Ace Mimi TWS earbuds at CES in January, followed by the Creative Aurvana Ace 3 in October. Both models feature xMEMS drivers paired with Mimi Sound Personalization, a combination that quickly became a focal point in reviewer feedback.

The Aurvana Ace Mimi earned five ‘Best of CES’ awards and was widely praised for delivering advanced features and impressive sound quality at an accessible price point. Reviewers repeatedly called out the xMEMS x Mimi integration, with TechRadar highlighting the combination as a standout feature.

The Aurvana Ace 3 has also received critical praise. SoundGuys noted, “The headline feature of the Ace 3 is the combination of xMEMS dual-driver technology with Mimi Sound Personalization,” while New Atlas emphasized the same synergy in its coverage: “Sound-personalized wireless earphones get solid-state help in the highs.”

Together, this recognition and momentum underscore the commercial viability of pairing extended-range hardware with intelligent, personalized sound processing in real-world products.

Exploring the next frontier: hearing intelligence

While this study focused on personalized sound processing, both Mimi and xMEMS are exploring technical frontiers beyond the standard features currently available in the market and working toward a vision where audio devices become truly adaptive to user intent, environmental conditions, and content type.

"We're investigating how this type of hardware-software synergy can enable devices to enhance communication abilities in real time, adapt dynamically to environmental acoustics, and optimize processing based on what the user is actually doing, whether that's having a conversation, consuming media content, or navigating a noisy environment. These are technical challenges both teams are actively working to solve," said Vinzenz Schönfelder, R&D Lead at Mimi Hearing Technologies.

As these software features evolve, the hardware foundation becomes critical. The more sophisticated processing algorithms like Mimi’s become, and the wider the range of use cases they address, the more the underlying hardware must accurately reproduce increasingly nuanced algorithmic adjustments across the full frequency spectrum. xMEMS provides this foundation, enabling precise signal reproduction for personalized sound while establishing a deep integration between transducer performance and processing that supports more complex adaptive behaviors in the future.

For product teams and brand leaders, it is no longer just about delivering better sound today; it is about selecting a foundation that supports long-term competitive advantage. Transducer selection directly influences not only how personalization performs, but also which adaptive capabilities will be feasible tomorrow and how far these capabilities can evolve across a product’s lifecycle.

Conclusion

This study reveals more than personalization performance; it highlights the technical foundation required for consumer electronics devices to truly adapt and deliver optimal listening experiences across the full frequency spectrum.

As audio processing moves beyond static tuning and toward real-time responsiveness based on user intent, environmental conditions, and content type, the role of hardware shifts from passive output to active enabler of intelligent hearing technology.

By combining xMEMS drivers with Mimi processing, brands gain a strategic advantage: the ability to deliver differentiated, adaptive audio features while scaling premium user experiences across multiple use cases. As innovative audio features continue to be a key differentiator, competitive advantage will belong to products built on foundations designed to adapt, evolve, and scale.

Meet the teams at CES 2026

xMEMS and Mimi will demonstrate their offerings at CES 2026, showing how extended frequency range amplifies personalization impact.

Complete study findings

The complete study findings, including detailed MUSHRA methodology, frequency response measurements, and listener feedback analysis, will be published in Q1 2026.